AI Accidents: Ed3 World Newsletter Issue #32

A three-part issue about designing AI-enabled schooling

This (almost) monthly newsletter serves as your bridge from the real world to the advancements of AI & other emerging technologies, specifically contextualized for education.

Dear Educators & Friends,

In my last issue, I promised to explore the implications of AI updates from Google & OpenAI on education. I’ve begun developing an AI-enabled schooling model to broaden our perspective on what’s possible.

Before diving into the big ideas that guided the development of this work, I want to highlight a few recent news stories.

Last Friday, OpenAI disbanded their AI Safety committee, triggering several high-profile resignations. Then this week, they created a new one.

Without permission, OpenAI used a likeness of Scarlett Johansson’s voice for Omni.

Google’s AI search summaries are prioritizing popular content over accurate information, rendering this feature useless.

OpenAI announced ChatGPT Edu yesterday - a gen AI tool for universities.

There’s a lot to unpack here, but I’ll focus on three key points:

Sam Altman was previously fired by his board in part for concealing his OpenAI VC firm and indirect equity in OpenAI. Disbanding the AI Safety committee and appointing himself as lead of a new one is particularly suspect, following the controversial emulation of an actor’s voice without consent. This raises serious doubts about whether Altman or OpenAI are prioritizing ethics or safety.

With the rollout of ChatGPT Edu for universities, safety and ethics become a larger issue. On the exciting and anxiety-inducing side, educators will likely need to rethink the entire learning model to avoid constant conversations about cheating and plagiarism.

This is Google’s second major AI faux pas, reinforcing the argument against centralization of AI. We are at the mercy of how these companies train their algorithms, and if we rely too heavily on AI for our information, we can kiss our self-governance goodbye.

Google, OpenAI, and other AI companies are not slowing down; they’re becoming unstoppable behemoths. Rather than focus on what we can’t control, my pressing question is:

How can we prepare for an unknown future with endless possibilities, but one that may threaten our self-governance, safety, and ethical values?

I’ve been working on several big ideas that might help us shape a desirable future in education. We can consider these parameters as we develop a vision that avoids past mistakes and promotes autonomy and agency.

I’ve divided this piece into three parts. Part 1 covers two big ideas, part 2 introduces two more, and part 3 will propose an AI model for schooling. Let’s dive in!

Part 1

Unintended Designs

“In designing for convenience, we accidentally designed for loneliness” - Shuya Gong

In a previous issue about teachers, I argued that most Edtech solutions, especially those developing generative AI tools, are not actually improving our profession, but merely addressing short-term, day-to-day survival. When we provide teachers with automated lesson planning or grading tools, we aren’t enhancing the profession to improve teaching and learning. Instead, we’re perpetuating the status quo of a system that fails by its own measures.

We really must understand what we’re solving for before integrating technology into learning. Since I’ve spoken at length about this, I want to introduce another idea that we don’t often consider.

What externalities are we designing for by solving the problem?

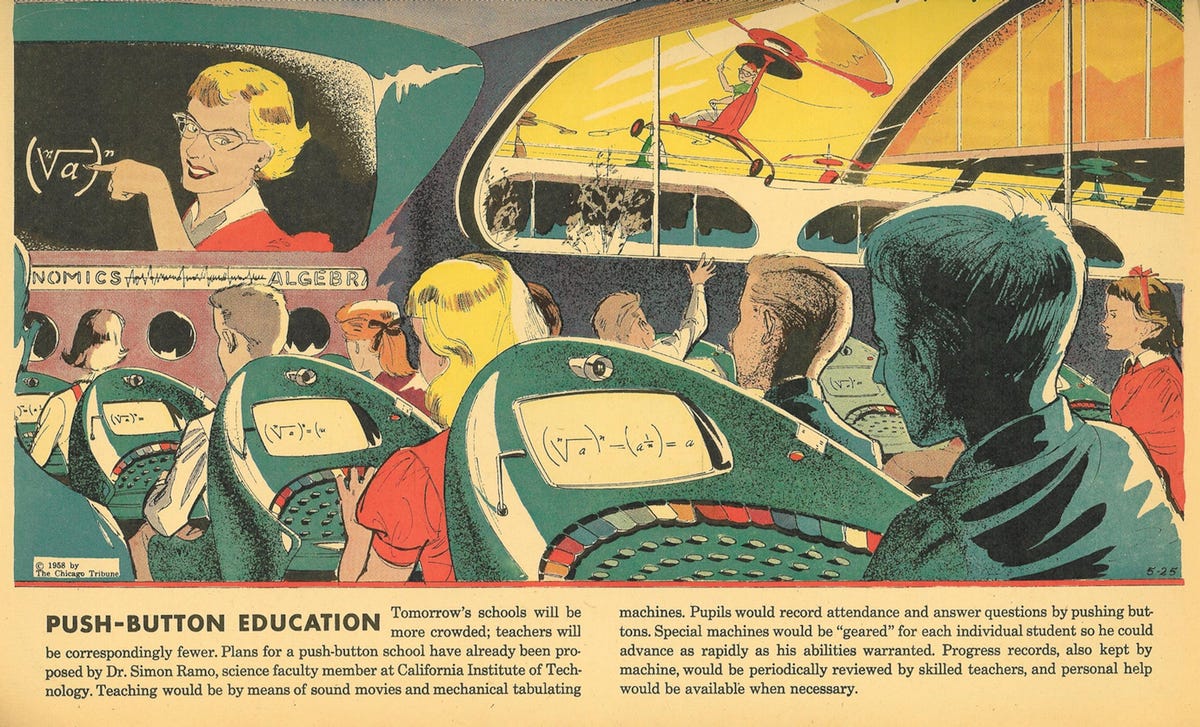

In the 1920s, we predicted videotelephony, home computers, electric cars, synthetic foods, and automated learning. Over the past 50 years, we’ve benefited greatly from these technologies.

However, we didn’t foresee obesity caused by addiction to artificial foods, depression and social isolation caused by social media, and election swings caused by rampant internet misinformation.

In this age of generative AI, many of us are rosy-eyed about personalization, automation, and transformation of our industries. Many are designing AI apps haphazardly to gain market dominance and bring new innovations into the field.

Facebook was created simply to connect people. Fossil fuels were innocently used to keep warm. Plastic was meant to be an affordable substitute for ivory. Yet, these technologies have, in many ways, poisoned the world both physically and intellectually.

So here’s the first big idea for designing our ideal, AI-enabled education ecosystem.

➡ Big idea #1: After we identify the right problem, we need to map the potential externalities of the solution.

AI’s existential and immediate threats like misinformation and weaponization have become part of our zeitgeist. But on a more nuanced level, what behaviors are we unintentionally designing for with AI tools, in our current societal conditions?

Shuya Gong of IDEO & Harvard puts it beautifully. She quotes E.O. Wilson: “We live in a society where there are paleolithic emotions, medieval institutions, and godlike technology.” On the human level, we operate from thousands of years of inherited evolution and genetics incentivizing two things - survival and pleasure. On the societal level, we add on hundreds of years of cultural inheritance and social incentives. And with new technologies, we layer in economic and power incentives. These three layers are often misaligned, and the human condition born from these parallel incentives impacts the consequences of technology.

Let’s apply this to AI in education.

Khan Academy is promoting a personal tutor for all. Google’s AI summaries are doing the heavy lifting for us. Magic AI is speeding up lesson planning. There’s merit to all of these advancements, but…

In designing for personal tutors and individualism… are we accidentally designing for social isolation?

In designing for AI summaries, are we accidentally designing for loss of critical thinking?

In designing for automated lesson planning, are we accidentally designing for loss of agency or maybe even the degradation of the teaching profession?

If you analyze Sal Khan’s video of ChatGPT4 Omni tutoring his son Imran, you’ll notice the personal tutor walks Imran through the entire problem step by step. However, there’s little indication that Imran learned anything. As humans who seek pleasure and survival, any student would lean on this personal tutor to complete assignments quickly, leaving more time for leisure activities.

Of course, there’s potential for improvement here, but that’s exactly the point - we need to understand the externalities of our inventions before proliferating them.

And one more thing - if we wait for data before preparing for consequences, it might be too late.

Misaligned Designs

Recently, Dan explored a similar idea from a different perspective, analyzing the use of generative AI for personalized word problems. He concluded that it’s largely ineffective. In his newsletter, he stated, “the words are personalized but the student’s work isn’t personal. Success on both worksheets looks like getting the same answer as everyone else. The student imprints very little of themselves on either worksheet. Consequently, the student feels wasted and unwanted in both.”

His point is clear: we’re designing experiences with AI in a way that optimizes AI, not the human experience.

This ties back to my earlier argument about solving for the right problem.

➡ Big idea #2: Let’s design for the human experience, not the optimization of technology.

Frameworks like SAMR and RAT remind us that merely substituting technology for our already flawed practices will not make teaching and learning better.

At this point, our adoption of AI is really at the substitution phase. Educators and students are using AI to do faster what they were already doing. And to Dan’s point, some of us are using AI to do what AI does well, with little understanding of whether it’s actually improving our lives.

Very few of us are using AI to redefine learning or enhance our own cognitive abilities.

This seems to be a natural evolution of technology. Redefinition requires a new way of thinking and when you’re just trying to survive, it’s difficult to move beyond necessity. It takes time for humans to reimagine their practices and to move up the SAMR ladder.

In the Next Issue…

I’ll let you take a breather here until our next issue, where I’ll introduce two more big ideas to help us create a framework for envisioning a practical, outcomes-based schooling model enhanced by AI.

Thanks for ideating with me.

Warmly yours,

Vriti

I’m Vriti and I lead two organizations in service of education. Ed3 DAO is a community for educators learning about emerging technologies, specifically AI & web3. k20 Educators builds metaverse worlds for learning.

Ooof sorry for the typos in the email version! I really should be using AI more diligently to grammar check :)

Hi Vriti. Another parent I know, a wealthy one, sends his daughter to an Acton Academy. It seems to be a franchise. As I understand it, students are on personalized learning paths through educational apps and some class activities in the morning, with projects set for the afternoon. But there isn’t a need to separate students by grade levels or abilities with this model. Khan Academy’s physical school in California, and other progressive private schools, also follow a similar model.

My own daughter learned to read English at quite an early age, in part because I sat with her nearly every day for 10-15 minutes on the Khan Academy Kids app, often letting her choose something new to learn on the app, … often with a walk and drink at the convenience store. This is in Taiwan, where most people don’t speak English, as it’s not a priority just yet.

Anyway, the point is that students can learn so much faster with apps that have mastery built in, and when the sage on the stage (the teacher) becomes more of the guide on the side.

I appreciate how you presented the models here, and especially that quote. So I look forward to your follow-up article, and what you include there as solutions.