AI Externalities: Ed3 World Newsletter Issue #33

A few interesting updates from the AI & Edu world!

This (almost) monthly newsletter serves as your bridge from the real world to the advancements of AI & other emerging technologies, specifically contextualized for education.

Dear Educators & Friends,

It’s been a hectic summer with the growth of our little non-profit, Ed3 DAO. We have a full-time team now (yay!) working on high-quality courses for educators on emerging technologies and research-based pedagogies. With our university partners, we’ve distributed over 20,000 Continuing Education Units to educators nationwide, and we’re excited to launch a new series of courses related to AI this Fall.

I am so grateful to the almost 6,000 of you who have been following our journey through this newsletter. Let’s get back into Ed3 World with some fun updates from the world of AI.

A few recent news stories:

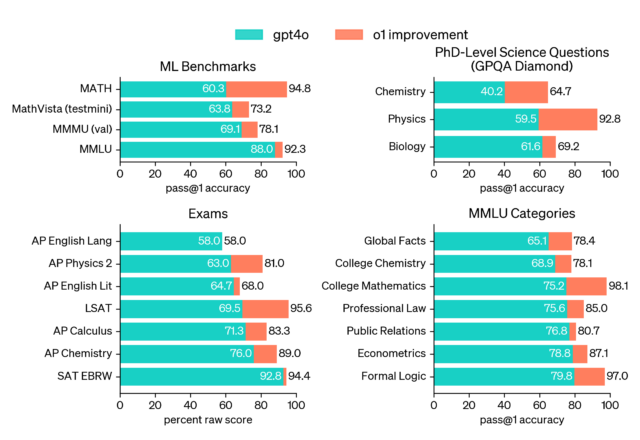

OpenAI’s new model, o1 (code-named Strawberry), was just released, and OpenAI claims it can reason logically like humans can. Some o1 improvements are represented in the accompanying chart.

🙄 If you know me, you know what I have to say about this. Beware of the marketing lingo here - AI systems are getting better at simulating meaning-making, but they are not actually making meaning like humans. “Reasoning” through a complex problem requires many levels of understanding. AI’s pattern matching may be optimized to make connections, but the human experience has yet to be emulated by robots. Hugging Face CEO Clem Delangue agrees.

Gaming company Altera put 1,000+ autonomous AI agents in Minecraft and they created their own democracy.

😍 I absolutely love this because 1) it gives you a clear idea of what AI “thinks” (thinks = pattern matching using data) of humanity, 2) it gives us the ability to simulate scenarios with various character personas for a variety of industries (especially education), 3) it clearly conveys the limitations of agents and their “reasoning” abilities, and 4) it’s just super fun to watch. I promise you, in the next 10 years, this will become one of the most important teaching tools for classes related to Economy, Ecology, Marketing, Government Studies, and Science.

Common Sense Media assessed some top AI tools for their risks to students. TL;DR: Anthropic prevailed while Perplexity failed.

👏🏽 I am so glad Common Sense Media did this because it’s the start of the Evaluation Phase of technology - by creating norms about what we expect of tech products and then continuously evaluating them against those norms, we’re setting standards for new products. I want to see more companies doing this type of evaluation from a number of angles and testing products for their safety. We shouldn’t rely on one party or one set of principles.

Andreessen Horowitz released the top 100 (50 web, 50 mobile) most visited Gen AI products.

🧐 It was quite interesting to see that companion and character generation AI products represented 25% of the top 20 web apps. Most of these apps are incredibly useful for educators so I highly recommend you check them out. (Beware of a few inappropriate ones!)

The White House announced a self-regulated voluntary commitment from tech companies to combat deep fakes.

😧 I’m wondering: Why isn’t this a mandated regulatory policy instead of a self-monitored voluntary commitment?

In my last post, I started introducing some big ideas that might help us shape a desirable future in education in the age of AI. We can consider these parameters as we develop a vision that avoids past mistakes and promotes autonomy and agency.

➡ Big idea #1: After we identify the right problem, we need to map the potential externalities of the solution.

➡ Big idea #2: Let’s design for the human experience, not the optimization of technology.

Let’s put these first two big ideas in action using these latest stories.

What are the externalities of creating an LLM that claims to “reason like a human”?

If companionship and character generation are a big hit for AI usage, what does that tell us about our current and future human experience?

Deep fakes are an unintended externality of AI. If the government is unwilling to take a regulatory stand against them, what types of digital experiences are we designing for?

If we ask these questions critically - within our companies, of our government officials, within our classrooms, and within our social groups - we can attempt to avoid perpetuating the mistakes of the past and plan for our future.

In the Next Issue…

I’m easing back into writing so I’ll spare all of us a super lengthy post before the weekend. This month, I’ll introduce two more big ideas to continue my last thread on envisioning a desirable future for education.

Thanks for your critical lens.

Warmly yours,

Vriti Saraf

I’m Vriti and I lead two organizations in service of education. Ed3 DAO is a community for educators learning about emerging technologies, specifically AI & web3. k20 Educators builds metaverse worlds for learning.